DAQRI has announced a new milestone for its open-source augmented reality SDK, ARToolKit. The company has opened a beta of ARToolKit-5 for Linux, Android, iOS, macOS, and Unity. The update adds new functionality and is distributed under the Apache v2.0 license, so developers can create their own applications with minimal licensing restrictions.

ARReverie added a new tracking method that detects an ImageTarget without creating an external marker database. It combines several techniques, such as P3P and optical flow, for more reliable detection.

The system recognizes and tracks multiple images, with detection-based initialization that takes tens of milliseconds. Calibration, identification, and tracking are fully autonomous and scale to hundreds of detected images. Individual images or sets of images can be loaded and saved dynamically.

ARToolKit is an open-source computer vision tracking library for creating robust augmented reality applications that overlay virtual imagery on the real world. It is currently maintained as an open-source project hosted on GitHub. ARToolKit is a very widely used AR tracking library, with over 160,000 downloads of its last public release in 2004.

ARToolKit MMD tutorial, virtual 3D: Good morning, friends. This morning I want to share some information on how to create a virtual 3D object on the computer, but with our own room as the backdrop. A camera captures the background we want, and the computer then virtualizes it into 3D.

ARToolKit+ for Unity:

ARReverie provides the ARToolKit+ plugin for Unity, which brings the full functionality of ARToolKit into the Unity3D visual development environment. It consists of a set of Unity script components that integrate seamlessly with your Unity project, native plugins that implement the underlying core functionality, and a set of examples and accompanying resources that act as starting points.

Note: The plugin costs a one-time fee of $45. This amount helps ARReverie Technology explore the latest technology and share that knowledge.

System Requirements (Development & Deployment)

# Development Requirements:

- macOS Sierra (version 10.12.3) or later

- Xcode 8.2.1 or later (optional)

- Android Studio v2.1 and later (Optional)

- Unity3D 5.5 or later, installed on macOS; the free Personal Edition is sufficient to start

- The Unity3D plugin of ARToolKit+: ARToolkit+ UnityPackage

# Deployment Requirements / Supported Target Platforms:

- iOS v7.0 and later (armv7, armv7s, arm64)

- Android v4.0.3 and later (armeabi-v7a, x86)

- macOS v10.7 and later (x86_64)

Let’s Start Developing an application using ARToolKit + Unity

As a quick start with Unity3D and ARToolKit+, we use one of the default example scenes shipped with the ARToolKit+ Unity package. This tutorial describes how to create and demonstrate an augmented reality application using the Unity3D IDE and the ARToolKit+ for Unity SDK.

For the first tutorial, we’re going to start from scratch and create an application that recognizes an image shown to the computer’s or mobile device’s camera, tracks the image, and augments a 3D model over the tracked image in the video feed.

Prerequisite (Tools Needed):

Support for ARToolKit+ for Unity with the Unity3D IDE on Windows 10 is still under test and forthcoming, so the macOS-based Unity3D IDE is recommended.

- If you intend to build and deploy on Android, you’ll need to install the Android SDK, including the NDK, together with Android Studio or the Android command-line tools (for example, ADB).

- If you intend to build and deploy on iOS, you’ll need to install Xcode.

In this tutorial, we target Android as the deployment platform. We will use the ARToolkit+ AR SDK by ARReverie, the Unity 3D engine, and the Android SDK. Make sure you have downloaded the following before proceeding further.

- Unity Engine (I am using version 2017.3)

- ARToolkit+ V2.0 SDK for Unity (ARToolkit-Unity 6.0.2-Development)

- ARToolkit Camera Calibration for macOS (Camera Calibration and ImageTarget Manager for OS-X)

- Android SDK & Android Studio (Optional)

Step by Step Implementation

1. Setup a Simple Unity Project:

- Unity is a powerful and widely used game engine. Let’s start by creating a new Unity3D project by the name of “ARToolKit_ImageTarget”. (Disable Unity Analytics for now)

- Start Unity, enter a project name, browse to a location, select the 3D option, and click the ‘Create Project’ button.

2. Download & Install ARToolkit+ “Calibration Tool” for your Mac machine:

- Download: “ARToolkit_Plus_macOS.dmg“

- Open the download folder and run “ARToolKit_Plus_macOS.dmg” on your Mac.

- Make sure that “ARToolKit for macOS” is installed properly on your Mac.

- Now navigate to “/Applications/artoolkit6/SDK/Applications/” in Finder and open “ARToolKit6 Image Database Utility”.

- “ARToolKit+ Image Database Utility” is an offline utility provided by ARToolkit that helps us create and manage the ImageTarget database.

3. Import the ARToolKit+ Unity package into the Unity project:

- Import the custom UnityPackage for the ARToolKit+ Unity extension (ARToolkit+ Unity Package).

- To import a new custom package:

- Choose Assets > Import Package > Custom Package… to bring up File Explorer (Windows) or Finder (Mac).

- Select the package you want from Explorer or Finder, and the Import Unity Package dialog box displays, with all the items in the package pre-checked, ready to install. (See Fig 4: New install Import Unity Package dialog box.)

Select Import and Unity puts the contents of the package into the Assets folder, which you can access from your Project View.

- If any “API Update” related dialog box appears, just click on “I Made a Backup” and go ahead.

4. Open Unity-Scene “2DTrackerTest” from ARToolkit inside Unity Project:

- Open Scene ‘2DTrackerTest’ inside Project window

- Project >> Test Scene >> 2DTrackerTest.unity

- You should now see game objects such as “ARToolkit“, “ISceneRoot“, and “Marker scene 0” in the Hierarchy window.

- Delete “Marker scene 0”, “Marker scene 2”, “Marker scene 3” from Hierarchy window as shown in fig. below.

IMPORTANT NOTE: ARToolKit+ supports Mac, Android, iOS, and Linux as deployment platforms, so the project must be built for one of them in the Unity build settings. If an error such as ‘ARToolKit+: Build target not supported’ appears, just ignore it for now.

5. Import 3D model (Spider) from Unity Asset Store:

Now the fun part begins! Let’s download a 3D model with some animations for our augmented reality scene. For this tutorial, we chose the free Green Spider from the Unity Asset Store.

- You can open the Asset Store window by selecting ‘Window > Asset Store’ from the main menu. On your first visit, you will be prompted to create a free user account which you will use to access the Store subsequently.

- Navigate to ‘Window > Asset Store’ or select the ‘Asset Store’ tab.

- In Asset Store window search for the keyword “Spider Green” and download the same, as shown in the screenshot below.

6. Add 3D model (Spider) Inside ImageTarget in Unity Scene

Afterward, we introduce our 3D model (downloaded from Asset Store) into parent ImageTarget, to do this:

- Expand ‘Spider Green’ folder inside Unity Project window

- For the 3D Spider to appear over the ImageTarget/Marker, it needs to be made a child of “Marker scene 1” (this can be done by dragging the SPIDER prefab in the Hierarchy panel).

- Then, whenever the ImageTarget is detected by a mobile device’s camera, all the children of the target will also appear together.

- Simply drag and drop the SPIDER prefab onto ‘Marker scene 1’ in the Hierarchy window.

- Delete all ‘Cylinder’ and ‘Cone’ GameObjects from Hierarchy window as shown in fig. below.

- Setup Spider Prefab: For ‘Spider’ 3d model maintain the Position, Rotation & Scale properties (mostly default)

- Position: X=0, Y=0, Z=0

- Rotation: X=-99.9, Y=0, Z=0

- Scale: X=0.03, Y=0.03, Z=0.03

NOTE: This AR app will work on ImageTarget/Marker_Image even with a black and white image of the stones because the feature points will still remain (they usually depend on other factors like gradient, etc. instead of color).

You can download the marker image from here. This is the target image that your computer’s camera will recognize and track while demonstrating the ARToolKit6 augmented reality example applications.

7. Android Deployment Setup to Run App on Device:

We are almost done. Let’s save the scene (File >> Save Scene) and move on to the deployment step. At this point, if we select “Run” and point a printout of the image towards our webcam, the Spider is augmented over the printout.

The last step is to build the project for Android. We need to go to “File >> Build Settings”. We need to add the current scene by selecting “Add Open Scene”. Then, we need to select a platform (Android) and then select “Switch Platform“. Here, we will have multiple options:

- Export Project: This will allow us to export the current Unity project to Android Studio so it can be edited and used to add more elements.

- Development Build: Enabling this will enable Profiler functionality and also make the Autoconnect Profiler and Script Debugging options available.

Unity provides a number of settings when building for Android devices – select from the menu (File > Build Settings… > Player Settings…) to see/modify the current settings.

Before pressing the Build And Run, we need to make some more changes in the “Player Settings” options in the Inspector panel:

- The Company Name needs to be changed; e.g. to “ARReverie”.

- The “Package Name” under “Identification” needs to be changed, e.g. to “com.arreverie.artoolkitdemo”.

Now, we can proceed to “Build and Run”. Other changes like Minimum API Level etc. can be made as per additional requirements. We will need to connect an Android mobile device via USB and enable USB debugging.

NOTE: We will also need to have an Android developer environment set up before we can test our Unity games on the device. This involves downloading and installing the Android SDK with the different Android platforms and adding our physical device to our system (this is done a bit differently depending on whether we are developing on Windows or Mac).

If you get stuck at any point, or if you have questions about this tutorial or suggestions for a new AR tutorial, let me know in the comments section below.

Best of luck 🙂

Artoolkit Tutorial

This article discusses APIs that are not yet fully standardized and still in flux. Be cautious when using experimental APIs in your own projects.

Introduction

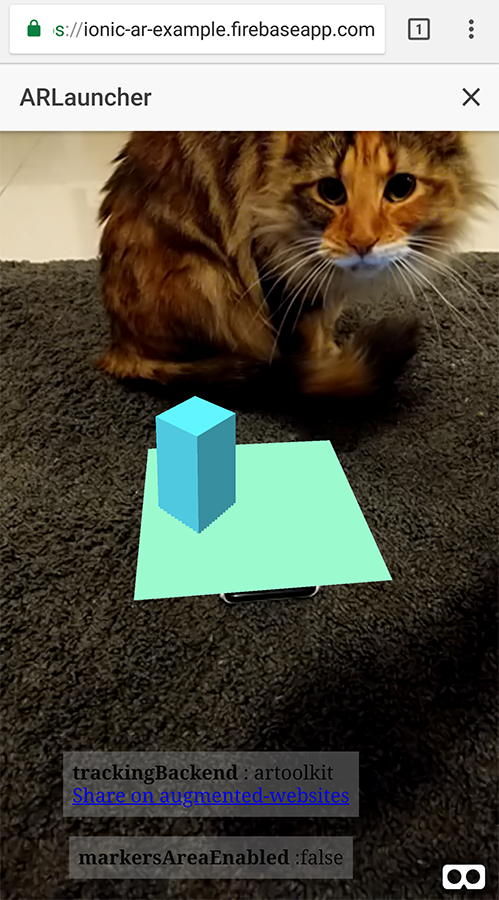

This article is about using the JSARToolKit library with the WebRTC getUserMedia API to do augmented reality applications on the web. For rendering, I'm using WebGL due to the increased performance it offers. The end result of this article is a demo application that puts a 3D model on top of an augmented reality marker in webcam video.

JSARToolKit is an augmented reality library for JavaScript. It's an open source library released under the GPL and a direct port of the Flash FLARToolKit that I made for the Mozilla Remixing Reality demo. FLARToolKit itself is a port of the Java NyARToolKit, which is a port of the C ARToolKit. Long way, but here we are.

JSARToolKit operates on canvas elements. As it needs to read the image off the canvas, the image needs to come from the same origin as the page or use CORS to get around the same-origin policy. In a nutshell, set the crossOrigin property on the image or video element you want to use as a texture to '' or 'anonymous'.
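As a minimal sketch of the CORS point above: the helper below sets crossOrigin before assigning the source, so the browser requests the media with CORS and the canvas stays readable. The function name and the example URL are made up for illustration, and the server still has to send suitable Access-Control-Allow-Origin headers.

```javascript
// Hypothetical helper: configure an <img> or <video> element so that a
// canvas it is drawn onto can still be read back for marker detection.
function loadCrossOrigin(element, url) {
  // Set crossOrigin BEFORE src. Otherwise the request may already have
  // started without CORS, which would taint the canvas.
  element.crossOrigin = 'anonymous';
  element.src = url;
  return element;
}

// Browser usage (the URL is a placeholder):
//   var video = loadCrossOrigin(document.createElement('video'),
//                               'https://media.example.com/clip.webm');
```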

When you pass a canvas to JSARToolKit for analysis, JSARToolKit returns a list of AR markers found in the image and the corresponding transformation matrices. To draw a 3D object on top of a marker, you pass the transformation matrix to whatever 3D rendering library you're using so that your object is transformed using the matrix. Then, draw the video frame in your WebGL scene and draw the object on top of that and you're good to go.

To analyze video using the JSARToolKit, draw the video on a canvas, then pass the canvas to JSARToolKit. Do this for every frame and you've got video AR tracking. JSARToolKit is fast enough on modern JavaScript engines to do this in realtime even on 640x480 video frames. However, the larger the video frame, the longer it takes to process. A good video frame size is 320x240, but if you expect to use small markers or multiple markers, 640x480 is preferable.

Demo

To view the webcam demo, you need to have WebRTC enabled in your browser (on Chrome, go to about:flags and enable MediaStream). You also need to print out the AR marker below. You can also try opening the marker image on your phone or tablet and showing it to the webcam.

Here is a demo of what we're aiming for. This demo creates an image slideshow using AR markers. Show a marker to the camera and it will place a photo on it. Move the marker out of the camera's view and show it again and the image has changed.

Setting up JSARToolKit

The JSARToolKit API is quite Java-like, so you'll have to do some contortions to use it. The basic idea is that you have a detector object that operates on a raster object. Between the detector and the raster is a camera parameter object that transforms raster coordinates to camera coordinates. To get the detected markers from the detector, you iterate over them and copy their transformation matrices over to your code.

The first step is to create the raster object, the camera parameter object and the detector object.
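Here is a rough sketch of that setup step. The class and method names (NyARRgbRaster_Canvas2D, FLARParam, FLARMultiIdMarkerDetector, setContinueMode) are the ones I recall from the JSARToolKit examples, so treat them as assumptions and check them against your copy of the library. The createTracker wrapper and its lib argument are my own invention, added so the library objects are passed in explicitly.

```javascript
// Sketch only: names recalled from the JSARToolKit examples, not verified.
// `lib` is the object holding the JSARToolKit globals (in a browser, pass
// `window` after loading the library script).
function createTracker(lib, canvas, width, height, markerWidth) {
  // Raster object: wraps the 2D canvas so the detector can read its pixels.
  var raster = new lib.NyARRgbRaster_Canvas2D(canvas);
  // Camera parameter object for frames of the given pixel size.
  var param = new lib.FLARParam(width, height);
  // Detector for ID markers. The second argument is assumed to be the
  // marker width; the examples I remember used 120.
  var detector = new lib.FLARMultiIdMarkerDetector(param, markerWidth);
  // Continue mode tracks markers across consecutive video frames.
  detector.setContinueMode(true);
  return { raster: raster, param: param, detector: detector };
}

// Browser usage:
//   var tracker = createTracker(window, canvas, 320, 240, 120);
```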

Using getUserMedia to access the webcam

Next, I'm going to create a video element that's getting webcam video through the WebRTC APIs. For pre-recorded videos, just set the source attribute of the video to the video URL. If you're doing marker detection from still images, you can use an image element in much the same way.

As WebRTC and getUserMedia are still new emerging technologies, you need to feature detect them. For more details, check out Eric Bidelman's article on Capturing Audio & Video in HTML5.
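One way to do that feature detection is sketched below. It prefers the standard promise-based navigator.mediaDevices.getUserMedia and falls back to the older callback-based and vendor-prefixed variants. The helper name pickGetUserMedia is my own, and the navigator object is passed in as an argument so the function can be tried outside a browser.

```javascript
// Returns a function (constraints) -> Promise, or null when the given
// navigator object exposes no getUserMedia variant at all.
function pickGetUserMedia(nav) {
  // Standard, promise-based API.
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    return function (constraints) {
      return nav.mediaDevices.getUserMedia(constraints);
    };
  }
  // Older callback-based and vendor-prefixed variants.
  var legacy = nav.getUserMedia || nav.webkitGetUserMedia ||
               nav.mozGetUserMedia || nav.msGetUserMedia;
  if (typeof legacy !== 'function') {
    return null;
  }
  return function (constraints) {
    return new Promise(function (resolve, reject) {
      legacy.call(nav, constraints, resolve, reject);
    });
  };
}

// Browser usage:
//   var getMedia = pickGetUserMedia(navigator);
//   if (getMedia) {
//     getMedia({ video: true }).then(function (stream) {
//       video.srcObject = stream; // older browsers used a blob URL as src
//       video.play();
//     });
//   }
```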

Detecting markers

Once we have the detector running a-ok, we can start feeding it images to detect AR markers. First draw the image onto the raster object's canvas, then run the detector on the raster object. The detector returns the number of markers found in the image.

The last step is to iterate through the detected markers and get their transformation matrices. You use the transformation matrices for putting 3D objects on top of the markers.
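The two steps above could look roughly like this. The detector methods (detectMarkerLite, getIdMarkerData, getTransformMatrix) and the packetLength / getPacketData members are what I recall from the JSARToolKit examples, so treat them as assumptions to verify. The function names decodeMarkerId and detectMarkers, and the makeResult factory argument, are my own.

```javascript
// Sketch only: detector method names are recalled, not verified.

// Decode an ID marker's packet bytes into a number (32 bits or fewer).
function decodeMarkerId(idData) {
  if (idData.packetLength > 4) {
    return -1; // too long for this simple decoder
  }
  var id = 0;
  for (var i = 0; i < idData.packetLength; i++) {
    id = (id << 8) | idData.getPacketData(i);
  }
  return id;
}

// Run detection on one frame and collect one transform per marker id.
// `makeResult` creates a fresh matrix object; with JSARToolKit that would
// be something like: function () { return new NyARTransMatResult(); }
function detectMarkers(detector, raster, threshold, makeResult) {
  var count = detector.detectMarkerLite(raster, threshold);
  var markers = {};
  for (var idx = 0; idx < count; idx++) {
    var id = decodeMarkerId(detector.getIdMarkerData(idx));
    var transform = makeResult();
    detector.getTransformMatrix(idx, transform);
    markers[id] = { transform: transform };
  }
  return markers;
}

// Per-frame browser usage (canvas.changed is how I remember telling the
// raster that the canvas contents were updated):
//   ctx.drawImage(video, 0, 0, 320, 240);
//   canvas.changed = true;
//   var markers = detectMarkers(detector, raster, 128, makeResult);
```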

Matrix mapping

Here's the code to copy JSARToolKit matrices over to glMatrix matrices (which are 16-element Float32Arrays with the translation column in the last four elements). It works by magic (read: I don't know how ARToolKit matrices are set up. Inverted Y-axis is my guess.) Anyway, this bit of sign-reversing voodoo makes a JSARToolKit matrix work the same as a glMatrix.
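A sketch of such a copy follows. The field names m00 to m23 (row, then column) and the sign pattern are what I recall from the JSARToolKit examples, so treat both as assumptions. In effect the pattern negates the Y row of the result and the Z column of the input.

```javascript
// Copy a 3x4 ARToolKit-style transform (fields m00..m23, row then column)
// into a 16-element column-major array as used by glMatrix.
// The field names and sign flips are assumptions to verify against the library.
function copyMarkerMatrix(arMat, glMat) {
  // Column 0
  glMat[0] = arMat.m00;
  glMat[1] = -arMat.m10;
  glMat[2] = arMat.m20;
  glMat[3] = 0;
  // Column 1
  glMat[4] = arMat.m01;
  glMat[5] = -arMat.m11;
  glMat[6] = arMat.m21;
  glMat[7] = 0;
  // Column 2 (input Z axis is negated)
  glMat[8] = -arMat.m02;
  glMat[9] = arMat.m12;
  glMat[10] = -arMat.m22;
  glMat[11] = 0;
  // Column 3: translation
  glMat[12] = arMat.m03;
  glMat[13] = -arMat.m13;
  glMat[14] = arMat.m23;
  glMat[15] = 1;
  return glMat;
}

// Usage:
//   var glMat = copyMarkerMatrix(resultMat, new Float32Array(16));
```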

To use the library with another library, such as Three.js, you need to write a function that converts the ARToolKit matrices to the library's matrix format. You also need to hook into the FLARParam.copyCameraMatrix method. The copyCameraMatrix method writes the FLARParam perspective matrix into a glMatrix-style matrix.

Three.js integration

Three.js is a popular JavaScript 3D engine. I'm going to go through how to use JSARToolKit output in Three.js. You need three things: a full screen quad with the video image drawn onto it, a camera with the FLARParam perspective matrix and an object with marker matrix as its transform. I'll walk you through the integration in the code below.
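One piece of that integration can be sketched without loading Three.js at all. glMatrix arrays are stored column by column, while THREE.Matrix4's set method takes its 16 arguments row by row, so the elements have to be reordered first. The helper name toRowMajorArgs and the markerRoot object in the usage comment are my own placeholders.

```javascript
// Reorder a 16-element column-major array (glMatrix layout) into the
// row-by-row argument order expected by THREE.Matrix4#set.
function toRowMajorArgs(m) {
  return [
    m[0], m[4], m[8],  m[12],
    m[1], m[5], m[9],  m[13],
    m[2], m[6], m[10], m[14],
    m[3], m[7], m[11], m[15]
  ];
}

// Usage with Three.js (not executed here; markerRoot is a placeholder Object3D):
//   markerRoot.matrixAutoUpdate = false; // keep Three.js from overwriting it
//   markerRoot.matrix.set.apply(markerRoot.matrix, toRowMajorArgs(glMat));
//   markerRoot.matrixWorldNeedsUpdate = true;
```

The same reordering applies to the perspective matrix before it is written into the camera's projection matrix.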

Here's a link to the Three.js demo in action. It has debugging output enabled, so you can see some internal workings of the JSARToolKit library.

Summary

In this article we went through the basics of JSARToolKit. Now you are ready to build your own webcam-using augmented reality applications with JavaScript.

Integrating JSARToolKit with Three.js is a bit of a hassle, but it is certainly possible. I'm not 100% certain if I'm doing it right in my demo, so please let me know if you know of a better way of achieving the integration. Patches are welcome :)